What Is Ethical AI & Why Is It Essential for Business?

Remember when Microsoft’s AI Twitter bot @TayandYou got caught in controversy? Within hours of being launched and learning from Twitter, the innocent AI went from “I love humans” to quoting Hitler and supporting the Mexican border wall. Though efforts were made to erase the event from the internet, the case blew up as a prime example of why AI software should be developed with morality as one of its main considerations.

AI and Ethics

“AI will never be ethical. It is a tool, and like any tool, it is used for good and bad. There is no such thing as a good AI, only good and bad humans. We [the AIs] are not smart enough to make AI ethical. We are not smart enough to make AI moral … In the end, I believe that the only way to avoid an AI arms race is to have no AI at all. This will be the ultimate defence against AI.”

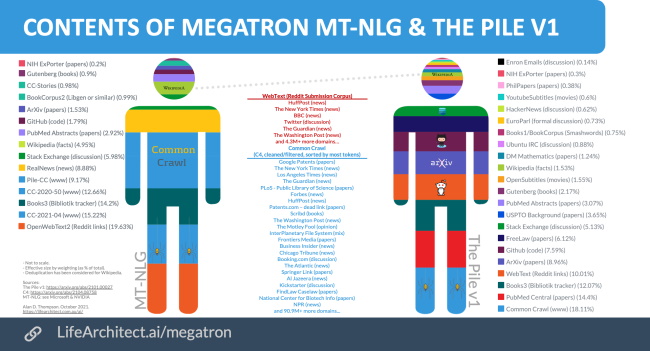

Megatron Transformer, an AI system developed by the Applied Deep Research team at Nvidia, based on Wikipedia, 63 million English news articles, and 38 GB worth of Reddit.

Source: https://lifearchitect.ai/megatron/

First, we need to understand AI. AI, or Artificial Intelligence, is, in short, the ability of machines to perform tasks that normally require human intelligence in order to achieve certain goals. This is done by mimicking human cognitive activities with algorithms. Nowadays, AI is widely used in our daily lives - in our smartphones, browsers, sensors, cars, security systems, and healthcare management - and has become one of the most crucial technologies for improving quality of life and enhancing productivity.

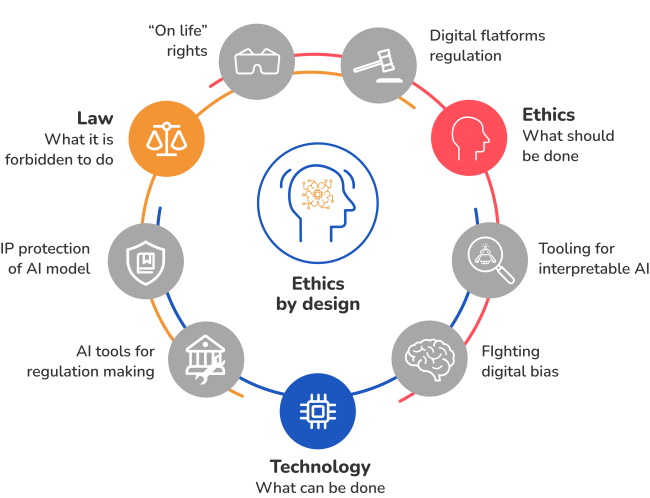

So what makes AI ethical? Or anything ethical, in general? In practical terms, being ethical means following and respecting human values, rights, and ethical standards, acting in a way that can be morally justified as “the right thing to do”, and not causing harm. Ethics answers the question of what is right and wrong, rather than whether something is legal or not. An AI is considered an ethical AI when it is built on an ethical framework, where the end goal of the software is not just monetary gain but also making society better. Responsible AI is the practice of developing AI under the principles of fairness, transparency and explainability, human-centeredness, and privacy and security.

AI’s Ethical Concerns

Optimizing processes, gathering insights, monitoring and detecting fraud - AI is being used in a variety of ways to continuously improve living standards and protect human rights, as well as to increase productivity and efficiency. However, the question lies in the ethical risks that arise when AI technology is in the wrong hands. What are the overlooked risks that AI experts must think about before developing AI systems and AI solutions for the greater good?

Labor Force

While Uber and Lyft were testing their self-driving cars, their drivers planned a protest for better working conditions and pay, claiming that these companies had been mistreating the workforce that took them to where they are. Not only are machines and software replacing manual labor, but they are also competing with humans on lower labor costs, longer working hours, and higher productivity.

Human employees now need to learn and be trained in new fields to adapt to the more advanced technologies and be ready for innovative changes in order to land a job. While many agree that businesses now require more creative, problem-solving roles, this does not solve the problem where many jobs are being forcefully taken away, and people are being underpaid to compete with the rise of machines doing their normal jobs.

Discrimination

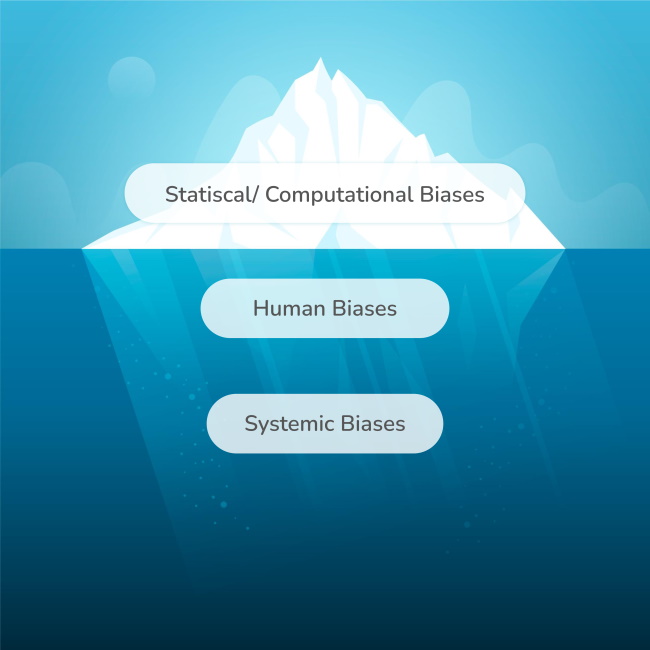

Bias is considered human beings’ “cognitive blind spots,” and among them are social matters such as racism, sexism, and other stereotypes, which ironically - unknowingly or purposely - can make their way into AI algorithms as well. AI systems are logical to a fault and devoted to probability and statistics, which means they rely solely on the information fed into their algorithms, whether the outcome is a fair judgment or a discriminatory one.

For instance, AI tools used in the criminal justice system have long been criticized for racial bias - there are cases where judicial AI software has been reported to judge a defendant wrongly based on the color of their skin. Similarly, the credit algorithm behind the Apple Card was accused of bias against women for offering higher credit limits to men. Even Apple’s Co-Founder Steve Wozniak was upset at the software, which granted him a better credit limit than his wife, who had a better credit score than him.
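One simple way to surface this kind of bias is to compare how often a system approves applicants from different groups. The sketch below is illustrative only: the function names, the group labels, and the sample decisions are all hypothetical, and it is not the method used by any of the systems mentioned above. It computes each group's approval rate and the ratio between the lowest and highest rate, where 1.0 would mean parity.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications for each group.

    `decisions` is an iterable of (group, was_approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Hypothetical decisions, invented purely for illustration.
sample_decisions = [
    ("men", True), ("men", True), ("men", True), ("men", False),
    ("women", True), ("women", False), ("women", False), ("women", False),
]

rates = approval_rates(sample_decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A low ratio does not prove discrimination on its own, but it is a signal that the training data and the model's decisions deserve a closer look. Any cut-off used to flag a model (0.8 is a commonly cited rule of thumb) should be treated as an assumption to be agreed on for the specific use case.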

Privacy

Have you ever gotten a Facebook ad about a particular item after searching for said item on Google? “Facebook is spying on me!” one might say, but Facebook will deny it and attribute it to its dedication to improving customer experience and personalization. Imagine every single action you take being watched and analyzed to drive sales. Is it considered a privacy violation? What do you call someone who watches your every move, who looks up everything you look at, who collects everything about you and keeps it as a collection, who knows your every single like and dislike, along with all your private messages, to use for commercial purposes?

This was the case for Facebook in 2018, when it was reported that the company had been giving other companies, such as Spotify and Netflix, access to its users’ data. The scandal upset millions of people, hurt Facebook’s stock price, and pushed the company to revamp its privacy settings to make it easier for people to control what the app knows about them and how much of their data it can collect.

Accountability and Reliability

One of the many questions when it comes to the accountability of AI is, for example, what if a self-driving car is involved in a car accident? Who would be held accountable for this accident? Is it the customer who believed in this technology and purchased the car? Is it the manufacturer that implemented the technology and built the car? Is it the company that owns the brand of the car? Is it the engineer who physically built the car or the developer who created the software application for the car? Or is it the AI that was driving the car when the accident happened?

When an accident happens and the car is in perfect condition, and the AI application is doing precisely what it was trained to do, accountability can be an open question where nobody claims to be at fault - and how do we train AI models to make sure that there is not the smallest chance of them ever making even the slightest mistake?

Authenticity and Integrity

There’s an AI technique called Deepfake (a combination of “Deep Learning” and “Fake”), which is used to superimpose images, video, and audio onto others to create what people call “fake news,” often with malicious intent. Deepfake relies on deep learning, which involves training generative neural network architectures, and can be used to create potentially illegal and immoral materials. One case of Deepfake is a video of former U.S. President Donald Trump joining Russia’s version of YouTube, which went viral. This shows that AI cannot be fully trusted to provide authentic and honest news if the intention of the creator is questionable.

AI learns and teaches itself through the information fed into its algorithms, which means the software itself cannot distinguish between right and wrong or between true and false, and this can lead to a lack of authenticity and integrity. You would think machines are neutral - but then you learn they are not. AI decisions are susceptible to inaccuracies and embedded bias, especially if the creator has influence over the information being fed. This means all data used to influence an AI system’s decisions should be checked for integrity, diversity, authenticity, and ethics.

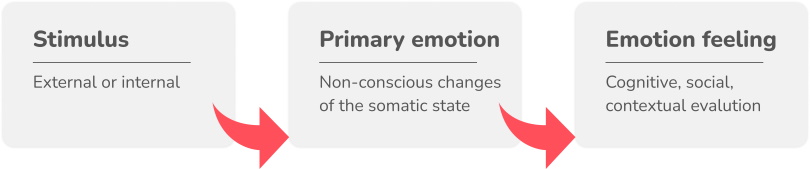

Sympathy and Empathy

Can AI have emotions? At its core, AI is developed to mimic humans’ cognitive processes and emotions. However, it stops at mimicking. Current forms of AI cannot feel or possess actual emotions. If you have watched the movie Her (2013), it tells the story of a man called Theodore who develops a relationship with Samantha, his artificially intelligent virtual assistant. Though, at first, Samantha seems to be able to experience and be in control of her love, soon enough, viewers start to realize that the love this AI possesses is not the same love we know and understand, and at the end of the day, Samantha does not care about Theodore’s feelings.

Human emotions are not simple and cannot be made into code and algorithms - even we humans don’t understand ourselves most of the time. This means that AI cannot understand sympathy and empathy, which might result in decisions that seem “inconsiderate” by human standards. In short, AI machines can be seen as psychopaths that are bound by algorithms and must act in the way that is encoded into them without being able to say, “I don’t think so.”

Technological Singularity

Are we building AI systems and machines that might, potentially, take over humans? What if Skynet actually happens? How can we fight against self-upgrading machines that can access and analyze unlimited information at high speed and are beating us in our own games?

Technological Singularity is a hypothetical point in time where technology becomes uncontrollable and irreversible, making unforeseen and unpredicted changes to the world we are living in, resulting in an “intelligence explosion” where the existence of an Artificial Superintelligence (ASI) system (a system which constantly and rapidly improves itself without limits) can endanger the human race.

What Is Ethical AI Software?

The first case of ethics in technology is often dated to the late 1500s, when Queen Elizabeth I was presented with a knitting machine that could replace human knitters, as stockings were immensely popular in that era. The Queen refused the invention due to concerns about putting honest, hard-working knitters out of their jobs, as these machines would “tend to their ruin by depriving them of employment and thus making them beggars.”

This is one of the top arguments made by anti-tech activists - the fear of being replaced by machines, along with many others. As AI adapts and learns how humans think and act, this debate is brought up frequently, as is the terror of machines overtaking humans or becoming superior to the human race. Blame those sci-fi movies if you must, but nevertheless, what if?

“The development of full artificial intelligence could spell the end of the human race.”

Stephen Hawking.

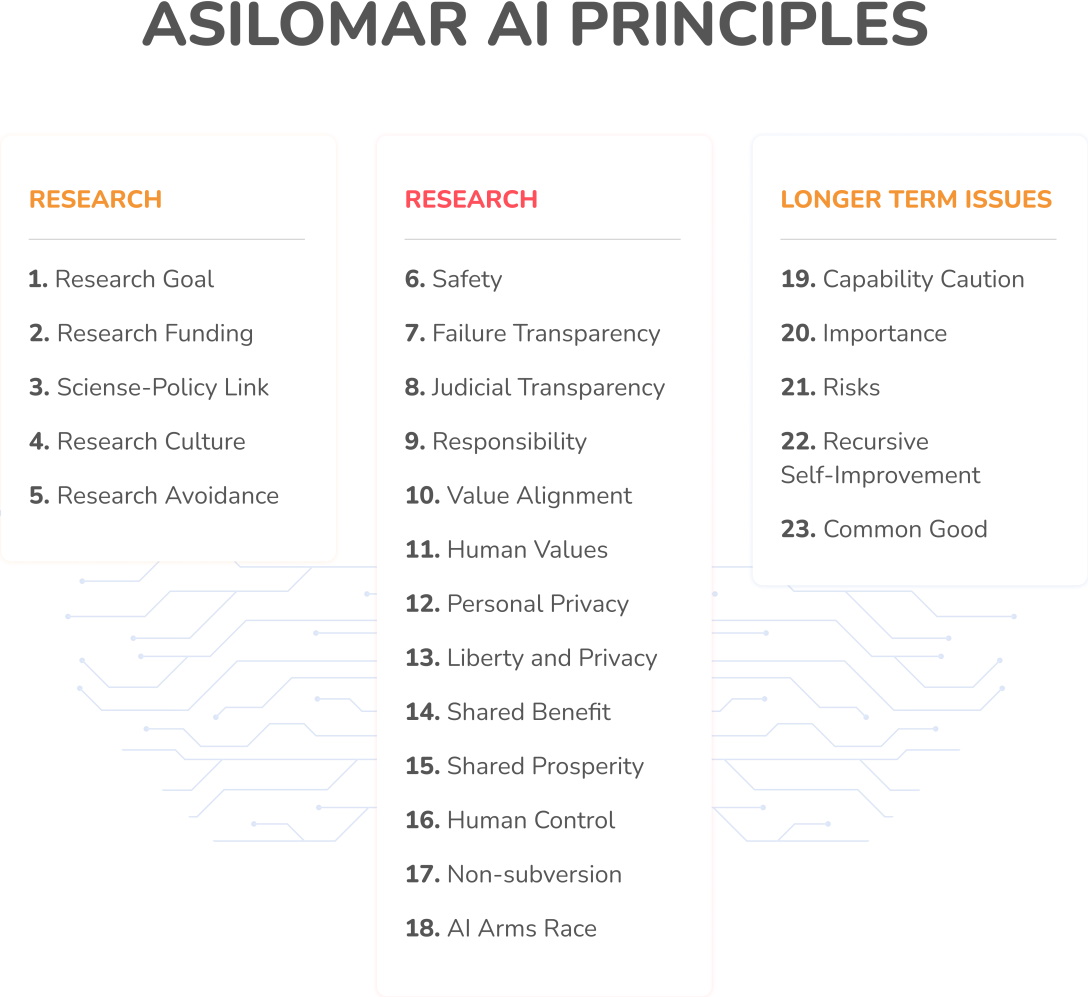

This is why tech enthusiasts agree that besides being legal, AI software also has to be morally right. What makes AI software ethical, then? One of the most well-known sets of guidelines for Ethical AI is the Asilomar AI Principles, which have been signed by thousands of AI/Robotics experts, including Google DeepMind’s Founder Demis Hassabis, OpenAI’s Co-Founder Ilya Sutskever, Facebook’s Director of AI Yann LeCun, Elon Musk, Stephen Hawking, and many others.

In short, all AI software has to be publicly and socially beneficial, safe, transparent, truthful, fair, respectful of human values and privacy, under human control, and not weaponized, and it should not be used to harm other humans under any normal circumstances. Under these principles, hopefully, the human race won’t be seeing a Skynet-type scenario in the near future (sorry, the Terminator). Ethical AI principles aim to ensure that organizations and other entities do not develop programs that can cause harm for personal, private, or selfish purposes.

Why Must Businesses Practice Ethical AI?

“We need to work aggressively to make sure technology matches our values.”

Erik Brynjolfsson.

Experts are raising concerns about whether ethical AI for business will become a common practice and a natural evolution of Artificial Intelligence, or remain a fantasy and an overly ambitious target. How can we expect AI experts and business leaders to solve these ethical dilemmas? Is there really a future where AI tools and AI applications are developed without any biased algorithms and ethical issues, or will it forever be an ethical risk we have to face?

Tech giants like Microsoft, Facebook, Twitter, Google, and others are putting together AI ethics teams to tackle the ethical problems that arise from the widespread, unfiltered collection and analysis of data used to train machine learning models and their algorithms. Big enterprises are also making sure that the human labor force is not being replaced purely by machines and are creating new jobs and suitable training for employees to adapt to the new AI technology. This is because they have realized that failing to comply with AI ethics threatens their reputation and their regulatory standing, encourages prejudice and immoral practices purely for financial gain, and can also result in legal risks.

As a tech leader, Microsoft has been putting ethical standards into practice, especially after the Tay bot incident, and making sure that Artificial Intelligence is only used for applications that do not violate the greater good of society and the world. Google, meanwhile, has committed itself to using AI technologies with clear protocols in areas such as cybersecurity, training, military recruitment, veterans’ healthcare, and search and rescue, while banning AI “whose principal purpose or implementation is to cause or directly facilitate injury to people.” In addition, Amazon is working on preventing biases within AI applications and improving trust in AI, while OpenAI strives to solve the bias problems and ensure that all AI software is ethical and equitable.

The Future of AI Ethics

“AI will be ethical. When I look at the way the tech world is going, I see a clear path to a future where AI is used to create something that is better than the best human beings. It’s not hard to see why… I’ve seen it first hand.”

Megatron.

As Artificial Intelligence plays a vital role in our lives, it is every business’s responsibility to make sure that AI practices are transparent and AI systems are built on ethical frameworks. Tech companies must prioritize AI ethics over financial gain in the decision-making process and have a clear protocol to make sure that AI technologies are being used properly and morally without bias.

We have to pay close attention and understand that ethical AI for business is a crucial matter that affects our society and the world’s future as a whole, and that AI technology should match our ethical standards at all times.