Are You Really Doing DevOps? A Reality Check with AWS DevOps Tools

This article is a hands-on “reality check” for organizations and individuals who believe they are applying DevOps but might be doing so only half-heartedly or ineffectively. It defines what DevOps actually means and shows how the full AWS toolset can be used to build a strong, end-to-end CI/CD pipeline. It goes beyond a simple listing of tools and considers how they integrate to create an automated, seamless workflow.

DevOps has been one of the guiding principles of the IT industry in recent years, promising to change how software is developed, delivered, and maintained. It is a cultural philosophy intended to break down the silos between development and operations teams and to create a collaborative environment built on automation, continuous integration, and fast feedback. The advantages are convincing: according to recent industry reports, nearly all organizations that adopted DevOps saw beneficial outcomes, and almost half reported a faster time to market for their software and services. The tooling market reflects this relevance, with a projected value of approximately $47.3 billion in 2034.

Despite these encouraging numbers and the popularity of the term, many companies remain in a “DevOps-in-name-only” state. They have the tools but have not fully adopted the cultural and procedural changes needed to realize the returns. Common mistakes include skipping strategic planning, undertraining the team, and automating processes that were already broken. As of 2025, AWS continues to occupy a leading position among DevOps platforms. However, simply adopting AWS DevOps tools does not guarantee a successful DevOps practice.

This article is a reality check: a sober look at what real DevOps looks like and how AWS tools can take you past a superficial adoption. We will discuss the tenets of an effective DevOps culture and map them directly to a set of AWS services, including CI/CD pipelines with AWS CodePipeline, automated infrastructure management with AWS CloudFormation, and monitoring with Amazon CloudWatch. The goal is not simply to use the tools, but to use them to create a resilient, efficient, and truly collaborative development ecosystem.

The DevOps Dream vs. The Reality

Common Misconceptions

A major myth is that DevOps is simply a job title, such as “DevOps Engineer.” While organizations do hire individuals to specialize in this field, true DevOps is not a single person’s responsibility; it is a shared philosophy that requires collaboration across entire teams. This misconception usually results in yet another silo, a so-called DevOps team that sits apart from the main development and operations teams.

Another common error is treating DevOps as nothing more than the adoption of a new toolkit. Platforms such as Jenkins, Docker, or Kubernetes are useful, but they are only facilitators. Without the underlying cultural shift, such tools tend to produce an uncoordinated workflow and wasted resources, with none of the promised benefits.

The Reality Check

An effective DevOps pipeline is an automated and continuous process. It starts when a developer commits code to a shared repository, which automatically initiates a build and test sequence. When all checks succeed, the code moves automatically to a staging environment and eventually to production, while monitoring and feedback loops continuously provide insight. This is the DevOps Dream!

The situation, however, falls short in many organizations where development and operations still maintain traditional silos. On many projects, developers have what has been called the “it works on my machine” problem, and in practice, much code is simply tossed “over the wall” to the operations staff. The operations team is then confronted with the complexity of installing and supporting a system they did not build, and this creates tension, low deployment speed, increased error rate, and lack of ownership. This disjointed strategy is the opposite of an effective DevOps model and indicates the necessity of a paradigm change in how teams collaborate.

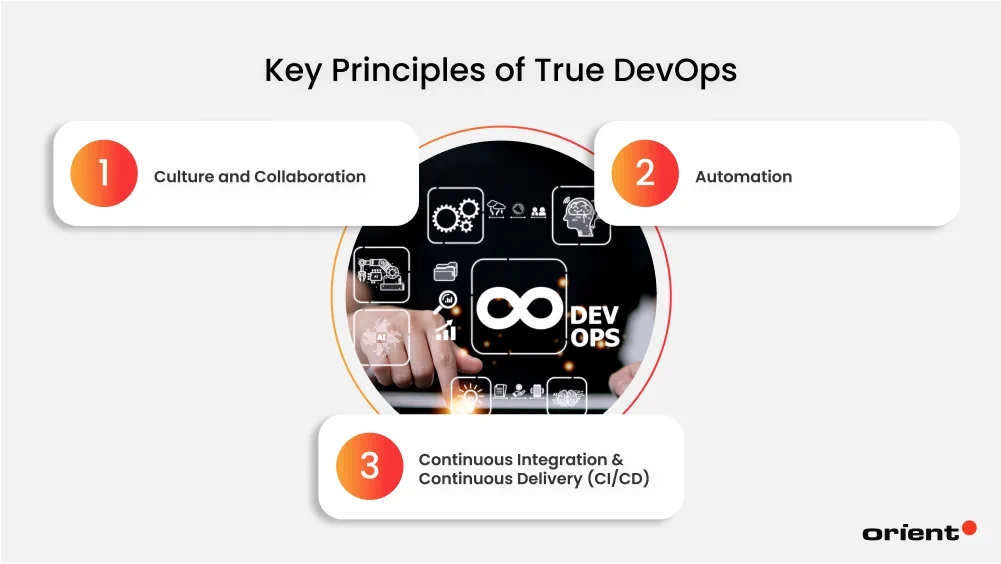

The Core Pillars of True DevOps

DevOps rests on a few key principles that close the gap between the dream and the fragmented reality. Applying them effectively addresses the pitfalls of legacy processes directly and forms the foundation of a genuinely efficient software delivery lifecycle.

Pillar 1: Culture and Collaboration

At its heart, DevOps is about a collaborative culture and shared responsibility. It demands breaking down the old silos between development, operations, and even security teams. Through a culture of open communication and trust, teams learn to work together toward the common goal of delivering high-quality software faster.

This culture is put into practice through “blameless post-mortems”, in which the team analyzes failures without assigning personal blame, and through integrated communication tools. The emphasis on empathy and learning from failure gives teams the confidence to experiment and improve without fear of failure, creating a safer environment for everyone.

Pillar 2: Automation

A DevOps pipeline is driven by automation, which is the key to bringing about the cultural transformation with a focus on speed and reliability. Automation will allow for the reduction of human error in performing repetitive and manual work, improving the speed of the delivery cycle. This comprises the automated processes of compiling and testing code (unit and integration) and deploying infrastructure (Infrastructure as Code) and software.

To give an example, rather than configuring a server manually, a tool such as AWS CloudFormation enables a team to specify its entire infrastructure in a template file. This provides repeatability and consistency and enables one to version the infrastructure and treat it just like an application code, thereby decreasing inconsistencies across environments.
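As a minimal sketch of what "infrastructure in a template file" can look like, the snippet below builds a small CloudFormation template in Python and serializes it to JSON. The logical ID `ArtifactBucket` and the choice of a single versioned S3 bucket are assumptions made for illustration, not something prescribed by the article.

```python
import json


def build_template(bucket_logical_id: str = "ArtifactBucket") -> dict:
    """Return a minimal CloudFormation template as a plain dictionary."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative template: one versioned S3 bucket",
        "Resources": {
            # The logical ID is a hypothetical name chosen for this example.
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                },
            }
        },
        "Outputs": {
            "BucketName": {"Value": {"Ref": bucket_logical_id}},
        },
    }


if __name__ == "__main__":
    # The JSON text is what would be committed, reviewed, and versioned.
    print(json.dumps(build_template(), indent=2))
```

Because the template is plain data, the same file can be reviewed in a pull request and reused for each environment.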

Pillar 3: Continuous Integration & Continuous Delivery (CI/CD)

Continuous Integration (CI) is the practice of frequently merging code changes from multiple developers into a common repository. Each integration triggers an automated build and test run. Integration faults are therefore identified early and often, which prevents one developer’s change from breaking the entire application.

Continuous Delivery (CD) extends this by automatically deploying the verified code to a staging or production environment. This removes repetitive manual testing and hand-offs before a release, so changes can reach customers with minimal effort and a shorter time-to-market.

What Exactly Is AWS DevOps?

AWS DevOps refers to the use of Amazon Web Services (AWS) tools and practices to put the DevOps philosophy into practice. It is a blend of cultural philosophies, practices, and a set of integrated AWS services that aim to automate and streamline all aspects of the software development lifecycle, from coding and building to deployment and monitoring.

The primary objective of AWS DevOps is to assist teams in accomplishing faster and more dependable deliveries of applications and services. Rather than integrating a patchwork of third-party solutions, the AWS toolkit offers a unified environment in which services such as CodeCommit (source control), CodeBuild (continuous integration), CodePipeline (orchestration), and CodeDeploy (continuous delivery) can be integrated to form a smooth and automated process. The integration allows the organizations to speed up their innovation and efficiency of operations and cut manual errors.

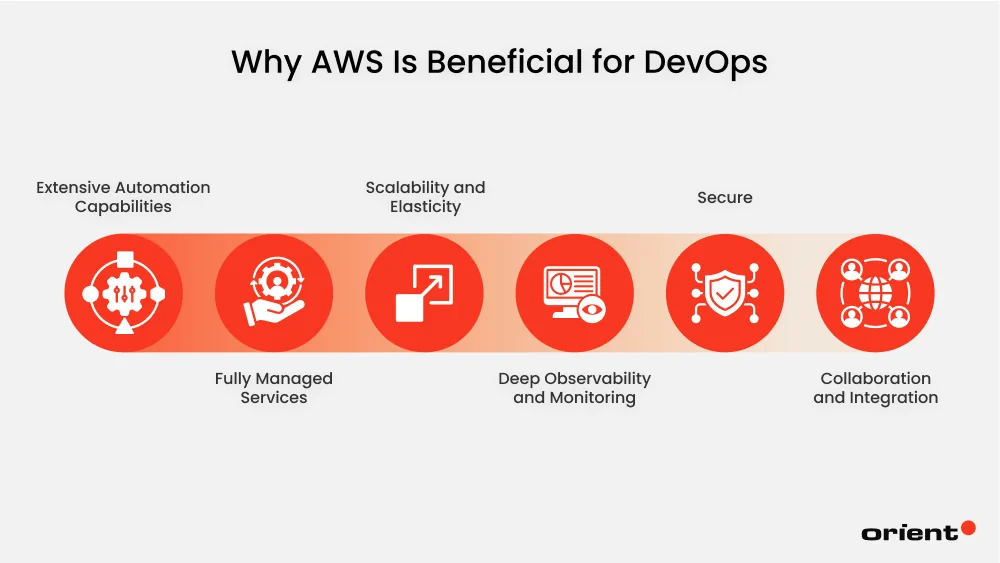

Why AWS Is Beneficial for DevOps

AWS is well suited to DevOps because it offers a comprehensive, integrated, and scalable ecosystem of tools that directly address the fundamental problems of modern software delivery.

Extensive Automation Capabilities

Services such as AWS CloudFormation let teams define infrastructure as code, which makes the provisioning of environments consistent and repeatable. This reduces manual configuration errors and speeds up the setup of development, testing, and production environments. Combined with AWS CodePipeline, CodeBuild, and CodeDeploy, teams can automate the entire software delivery cycle, from source control to deployment, so that changes are integrated continuously and delivered with minimal human involvement.

Fully Managed Services

AWS manages the underlying infrastructure for services such as CodeBuild, CodePipeline, and CodeDeploy. This implies that you need not concern yourself with server provisioning, patching, and security of your build and deployment environments. It not only gives your team some free time to concentrate on innovation but also makes sure that your DevOps tools are always available, scalable, and up to date. Many of these services use pay-as-you-go pricing, meaning that the cost of using them is based on actual compute time consumed.

Scalability and Elasticity

AWS also excels in scalability and elasticity, which DevOps pipelines need in order to respond to fluctuating workloads. AWS Auto Scaling can automatically scale EC2 instances based on traffic patterns or performance thresholds so that resources are used efficiently. Similarly, services such as AWS Lambda let an organization run code without pre-provisioning servers, which suits event-driven architectures and microservices. The serverless model makes it easy to iterate and experiment, both of which are central to DevOps.

Deep Observability and Monitoring

AWS tools such as Amazon CloudWatch, AWS X-Ray, and AWS CloudTrail provide detailed performance data, system monitoring, and an audit trail of user and API activity. These tools support proactive monitoring of events, root-cause analysis, and performance improvements. For example, CloudWatch supports custom metrics and alarms on them, which can automatically trigger a recovery action or send an Amazon SNS notification to the team. Such visibility is crucial for maintaining high availability and reliability in fast-paced DevOps environments.

Secure

Security and compliance are also well covered in AWS, which is vital for DevOps teams working in highly regulated industries. AWS Identity and Access Management (IAM) provides fine-grained permissions for services and users, which makes it possible to follow the principle of least privilege. AWS also supports native encryption and audit logging and maintains compliance programs (including ISO 27001, SOC 2, and HIPAA), so teams can meet regulatory and security requirements while still moving fast.

Collaboration and Integration

AWS services are built to be compatible with other popular DevOps tools such as GitHub, Jenkins, Docker, and Kubernetes. For example, Amazon EKS (Elastic Kubernetes Service) enables teams to operate containerized applications with Kubernetes and integrates with AWS networking, storage, and security services. With this interoperability, teams can use best-of-breed tools without giving up the strengths of AWS infrastructure.

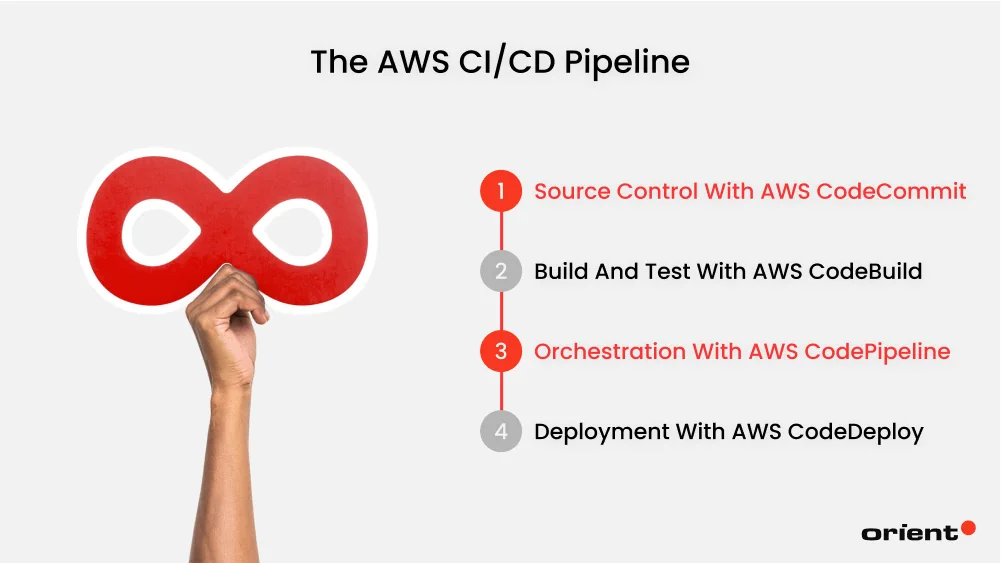

A Walkthrough of the AWS CI/CD Pipeline

A well-handled CI/CD pipeline is a fundamental element in contemporary software development, and AWS provides a seamlessly integrated group of services to automate such processes. This is an in-depth walkthrough of the contribution that each of these elements makes to a smooth CI/CD process.

Step 1: Source Control with AWS CodeCommit

AWS CodeCommit is the base of the CI/CD pipeline, as it offers a fully managed, scalable, secure repository based on Git. In contrast to other conventional Git hosting services, CodeCommit is tightly incorporated with other AWS services, enabling flawless authentication through AWS Identity and Access Management (IAM) and the straightforward triggering of downstream services such as AWS CodePipeline.

Code changes can be pushed to CodeCommit with normal Git commands, and these commits can automatically trigger build and deployment workflows. CodeCommit also supports encryption at rest and in transit, so the source code stays protected. It integrates with Amazon CloudWatch, allowing real-time monitoring and alerting on repository activity, which helps maintain code integrity and visibility.

Step 2: Build and Test with AWS CodeBuild

Once code is committed, it is handed over to AWS CodeBuild, which compiles the source, runs unit tests, and packages the application for deployment. CodeBuild is a fully managed build service that removes the need to provision and manage build servers. It supports many build environments and programming languages, which are configured through buildspec.yml files.

A notable selling point is its ability to scale automatically with the size and complexity of the build workload, so it performs reliably under heavy load. CodeBuild also uses pay-per-use billing: you are charged only for the compute resources used during the build, which keeps it affordable for teams of any size. It integrates with Amazon CloudWatch Logs, so developers can debug build failures and performance bottlenecks accurately and iterate faster.
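To make the role of buildspec.yml more concrete, here is a small sketch that describes build phases as Python data and renders them as YAML text. The commands (`pip install`, `pytest`) and the tiny `to_yaml` helper are assumptions for illustration; the helper handles only nested dictionaries and flat lists, and a real project would normally write the YAML file by hand.

```python
import json

# Hypothetical build definition: install dependencies, run tests, keep all files.
BUILDSPEC = {
    "version": 0.2,
    "phases": {
        "install": {"commands": ["pip install -r requirements.txt"]},
        "build": {"commands": ["python -m pytest"]},
    },
    "artifacts": {"files": ["**/*"]},
}


def to_yaml(value, indent=0):
    """Render nested dicts and flat lists as block-style YAML lines."""
    pad = " " * indent
    lines = []
    if isinstance(value, dict):
        for key, item in value.items():
            if isinstance(item, (dict, list)):
                lines.append(f"{pad}{key}:")
                lines.extend(to_yaml(item, indent + 2))
            else:
                lines.append(f"{pad}{key}: {json.dumps(item)}")
    elif isinstance(value, list):
        for item in value:
            # json.dumps double-quotes strings, which keeps values such as
            # "**/*" from being misread as YAML aliases.
            lines.append(f"{pad}- {json.dumps(item)}")
    return lines


if __name__ == "__main__":
    print("\n".join(to_yaml(BUILDSPEC)))
```

The rendered text has a `version` line, a `phases` section with `install` and `build` commands, and an `artifacts` section listing the files to keep.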

Step 3: Orchestration with AWS CodePipeline

AWS CodePipeline coordinates the whole CI/CD process and links every component, from source control to deployment, into one automated workflow. It watches the source repository and starts a pipeline execution whenever a new commit is made. CodePipeline supports multiple stages and actions, such as build, test, approval, and deploy, and these can be configured in the AWS Management Console or with infrastructure-as-code tools such as AWS CloudFormation.

CodePipeline integrates natively with CodeCommit, CodeBuild, and CodeDeploy, and also supports custom actions and third-party tools such as GitHub, Jenkins, and Slack. This versatility lets it fit many different setups. CodePipeline supports parallel actions and conditional gates such as manual approvals, so that only validated and approved code reaches production, which reduces the likelihood of errors and outages.
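The stage-and-action structure described above can be sketched as plain data. The snippet below assembles a four-stage pipeline declaration (source, build, approval, deploy) in the general shape CodePipeline uses, plus a small check that every input artifact is produced by an earlier stage. All names, the account ID, and the bucket are hypothetical placeholders, and `missing_inputs` is an illustrative helper, not part of any AWS SDK.

```python
def action(name, category, provider, configuration, inputs=(), outputs=()):
    """Build one action entry for a pipeline declaration."""
    return {
        "name": name,
        "actionTypeId": {
            "category": category,
            "owner": "AWS",
            "provider": provider,
            "version": "1",
        },
        "configuration": configuration,
        "inputArtifacts": [{"name": item} for item in inputs],
        "outputArtifacts": [{"name": item} for item in outputs],
        "runOrder": 1,
    }


# Every identifier below is a placeholder chosen for this example.
PIPELINE = {
    "name": "demo-app-pipeline",
    "roleArn": "arn:aws:iam::123456789012:role/demo-pipeline-role",
    "artifactStore": {"type": "S3", "location": "demo-artifact-bucket"},
    "stages": [
        {"name": "Source", "actions": [action(
            "Checkout", "Source", "CodeCommit",
            {"RepositoryName": "demo-app", "BranchName": "main"},
            outputs=["SourceOutput"])]},
        {"name": "Build", "actions": [action(
            "BuildAndTest", "Build", "CodeBuild",
            {"ProjectName": "demo-app-build"},
            inputs=["SourceOutput"], outputs=["BuildOutput"])]},
        {"name": "Approve", "actions": [action(
            "ManualApproval", "Approval", "Manual", {})]},
        {"name": "Deploy", "actions": [action(
            "Deploy", "Deploy", "CodeDeploy",
            {"ApplicationName": "demo-app", "DeploymentGroupName": "production"},
            inputs=["BuildOutput"])]},
    ],
}


def missing_inputs(pipeline):
    """Return (stage, artifact) pairs whose input no earlier stage produced."""
    produced, problems = set(), []
    for stage in pipeline["stages"]:
        for item in stage["actions"]:
            for artifact in item["inputArtifacts"]:
                if artifact["name"] not in produced:
                    problems.append((stage["name"], artifact["name"]))
        for item in stage["actions"]:
            produced.update(a["name"] for a in item["outputArtifacts"])
    return problems


if __name__ == "__main__":
    print([stage["name"] for stage in PIPELINE["stages"]])
    print(missing_inputs(PIPELINE))
```

Placing the approval stage between build and deploy is one way to express the "validated and approved" gate; teams that deploy continuously to staging might gate only the production stage.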

Step 4: Deployment with AWS CodeDeploy

AWS CodeDeploy handles the last part of the pipeline, automating application deployments to multiple compute environments (such as Amazon EC2 instances, AWS Lambda functions, and Amazon ECS services). CodeDeploy supports several deployment strategies, including in-place, canary, and blue/green deployments, which help teams minimize downtime and limit the impact of failures.

As an example, a blue/green deployment moves live traffic off the old environment (blue) only after a new environment (green) has been created and successfully validated. CodeDeploy also integrates with Amazon CloudWatch and AWS X-Ray Insights, so teams can monitor the health and performance of a deployment in real time. Rollback is built in: a deployment can be restored to the previous stable state if a problem is detected.
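The idea of shifting traffic gradually and rolling back on failure can be illustrated with a short simulation. This is a toy model and not CodeDeploy's implementation: the step percentages and the `is_healthy` callback are assumptions standing in for real health checks or alarms.

```python
from typing import Callable, List, Tuple


def shift_traffic(steps: List[int],
                  is_healthy: Callable[[int], bool]) -> Tuple[int, List[str]]:
    """Move traffic to the green environment step by step.

    Returns the final percentage routed to green and a log of what happened.
    On the first failed health check, all traffic goes back to blue.
    """
    log: List[str] = []
    for percent in steps:
        log.append(f"route {percent}% to green")
        if not is_healthy(percent):
            log.append("health check failed: roll back, route 0% to green")
            return 0, log
    return (steps[-1] if steps else 0), log


if __name__ == "__main__":
    # Hypothetical health check that starts failing at 50% traffic.
    final, history = shift_traffic([10, 50, 100], lambda percent: percent < 50)
    print(final)
    print("\n".join(history))
```

With a check that always passes, the same call ends with all traffic on green; the rollback branch is what keeps a bad release from reaching every user.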

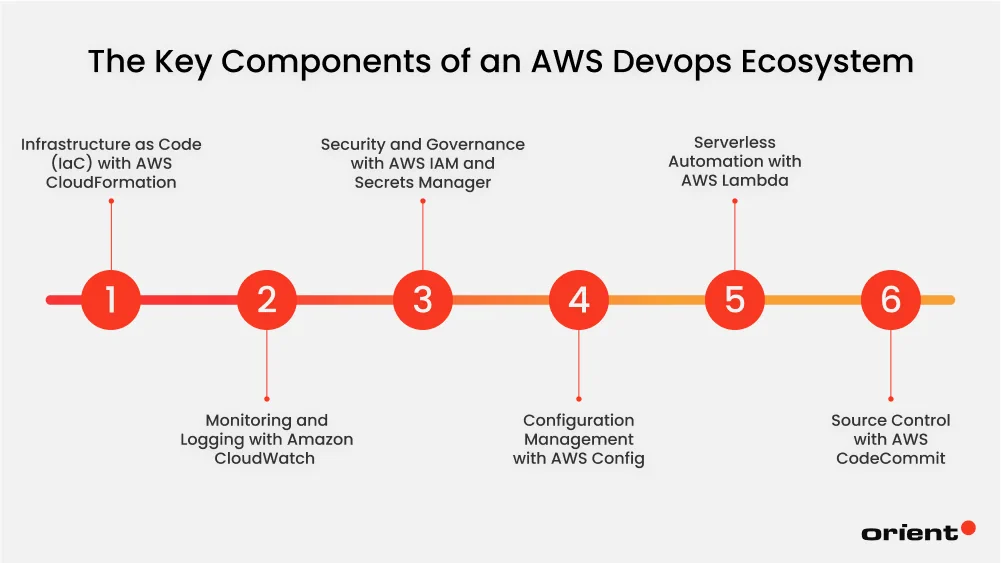

The Complete AWS DevOps Ecosystem

The real power of AWS for DevOps extends far beyond the basic CI/CD pipeline. The platform provides a rich ecosystem of services that address core segments of the software development process. Below is a detailed look at the key components that make up this ecosystem.

Infrastructure as Code (IaC) with AWS CloudFormation

AWS CloudFormation is a building block of infrastructure automation within the DevOps paradigm. It enables users to specify their whole cloud infrastructure (EC2 instances, VPCs, IAM roles, S3 buckets, and others) in declarative JSON or YAML templates. These templates can be version-controlled, shared among environments, and reused. As a result, manual configuration is rarely needed, and the risk of configuration drift is greatly reduced.

One of the most powerful aspects of CloudFormation is its stack management. A team can create, update, or delete whole environments with one command, and failed changes are rolled back automatically. This encourages a predictable and repeatable infrastructure lifecycle, which fits CI/CD practices. Moreover, CloudFormation can be integrated with AWS CodePipeline so that infrastructure changes are deployed along with the application code, which reinforces the infrastructure-as-code approach.

Monitoring and Logging with Amazon CloudWatch

In the DevOps world, observability is not a choice; it is a necessity. Amazon CloudWatch gives extensive visibility into AWS resources and applications by collecting and analyzing metrics, logs, and events. Developers and operations teams can track CPU usage, memory consumption, disk reads and writes, and custom application metrics in real time. CloudWatch Logs aggregates logs centrally, which makes it easier to trace a problem across distributed systems.

CloudWatch Alarms can trigger automated responses or notifications when thresholds are crossed, so conditions can be managed proactively. As a practical example, an alarm could scale out an EC2 Auto Scaling group when CPU exceeds 80% or notify the team through SNS when a Lambda deployment is not working as expected. CloudWatch can also be used in conjunction with AWS X-Ray to perform distributed tracing so that teams can identify performance bottlenecks and latency problems in microservices architectures.
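The 80% CPU example can be written down as alarm parameters. The sketch below builds a dictionary with the parameter names used by the CloudWatch `PutMetricAlarm` API (in boto3 it could be passed as `put_metric_alarm(**params)`), together with a deliberately simplified local check of when the alarm would fire. The group name, the SNS topic ARN, the five-minute period, and the two evaluation periods are assumptions for illustration.

```python
def cpu_alarm_params(auto_scaling_group, action_arns, threshold=80.0):
    """Return alarm settings for average CPU across an Auto Scaling group."""
    return {
        "AlarmName": f"{auto_scaling_group}-high-cpu",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "AutoScalingGroupName", "Value": auto_scaling_group},
        ],
        "Statistic": "Average",
        "Period": 300,            # seconds per datapoint (assumed)
        "EvaluationPeriods": 2,   # consecutive datapoints required (assumed)
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": list(action_arns),
    }


def breaches(datapoints, params):
    """Simplified model: the latest EvaluationPeriods values all exceed Threshold."""
    needed = params["EvaluationPeriods"]
    recent = datapoints[-needed:]
    return len(recent) == needed and all(v > params["Threshold"] for v in recent)


if __name__ == "__main__":
    # The topic ARN is a placeholder, not a real resource.
    params = cpu_alarm_params(
        "web-asg", ["arn:aws:sns:us-east-1:123456789012:ops-alerts"])
    print(breaches([50, 85, 91], params))
```

Requiring two consecutive datapoints is a common way to avoid reacting to a single short spike; the right value depends on how quickly the workload needs to scale.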

Security and Governance with AWS IAM and Secrets Manager

In DevOps, security is built in from the start, a practice referred to as DevSecOps. AWS Identity and Access Management (IAM) offers fine-grained control over who can access what and enforces least privilege and role-based access across the resources in the ecosystem. Scoping IAM policies narrowly to specific actions and resources significantly decreases the attack surface and supports compliance.
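A narrowly scoped policy can be sketched as follows. The snippet builds an IAM policy document that allows only reading and writing objects under one prefix of one bucket, and adds a small illustrative lint that flags statements using a bare `*`. The bucket name, prefix, and the `wildcard_statements` helper are assumptions for this example.

```python
import json


def artifact_bucket_policy(bucket, prefix="builds/"):
    """Allow object reads and writes under a single prefix of a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadWriteBuildArtifacts",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


def _as_list(value):
    return value if isinstance(value, list) else [value]


def wildcard_statements(policy):
    """Return Sids of Allow statements whose action or resource is a bare "*"."""
    flagged = []
    for statement in policy["Statement"]:
        too_broad = ("*" in _as_list(statement["Action"])
                     or "*" in _as_list(statement["Resource"]))
        if statement["Effect"] == "Allow" and too_broad:
            flagged.append(statement.get("Sid", "<no Sid>"))
    return flagged


if __name__ == "__main__":
    policy = artifact_bucket_policy("demo-artifact-bucket")
    print(json.dumps(policy, indent=2))
    print(wildcard_statements(policy))
```

A check like this could run in the pipeline before a policy is deployed, although a dedicated policy analysis tool would cover far more cases than this sketch.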

Alongside IAM, AWS Secrets Manager stores and rotates credentials, API keys, and other sensitive data securely. Secrets are retrieved programmatically through SDKs or deployment pipelines rather than being hardcoded in code repositories. Together, IAM and Secrets Manager create a strong platform for secure automation and allow teams to move quickly without sacrificing governance.
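Retrieving a secret at runtime, instead of hardcoding it, might look like the sketch below. The function takes the client as a parameter so the example runs without AWS access; in real use the client would typically be `boto3.client("secretsmanager")`, whose `get_secret_value` call returns the secret text under `SecretString`. The secret name, its JSON layout, and the `FakeSecretsClient` stand-in are assumptions for illustration.

```python
import json


def load_db_credentials(client, secret_id):
    """Fetch a JSON secret and return it as a dictionary."""
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


class FakeSecretsClient:
    """Stand-in for a Secrets Manager client, used only to run this example."""

    def get_secret_value(self, SecretId):
        # Placeholder values; a real secret would never appear in source code.
        payload = {"username": "app", "password": "example-only"}
        return {"Name": SecretId, "SecretString": json.dumps(payload)}


if __name__ == "__main__":
    credentials = load_db_credentials(FakeSecretsClient(), "demo/app/database")
    print(sorted(credentials))
```

Passing the client in also makes the function easy to unit test, which matters when secret handling sits on the deployment path.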

Configuration Management with AWS Config

With AWS Config, you can inspect and track changes to AWS resources and maintain an inventory of them and their configurations, which helps you stay continuously compliant. It records configuration changes over time so that teams can audit the state of resources and identify unauthorized modifications. Best practices can be enforced as Config Rules (e.g., ensuring that no S3 buckets are publicly readable or that EC2 instances are tagged correctly).
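The tagging rule mentioned above can be sketched as a pure function of the kind a custom Config rule might call. It takes a configuration item (a dictionary with `resourceType`, `resourceId`, and `tags`) and returns a simplified evaluation using the compliance values `COMPLIANT`, `NON_COMPLIANT`, and `NOT_APPLICABLE`. The required tag names are an assumption, and fields a real evaluation also needs, such as an ordering timestamp, are left out for brevity.

```python
REQUIRED_TAGS = ("Owner", "Environment")  # hypothetical tagging standard


def evaluate_tags(configuration_item, required=REQUIRED_TAGS):
    """Check that an EC2 instance carries every required tag."""
    result = {
        "ComplianceResourceType": configuration_item.get("resourceType"),
        "ComplianceResourceId": configuration_item.get("resourceId"),
    }
    if configuration_item.get("resourceType") != "AWS::EC2::Instance":
        result["ComplianceType"] = "NOT_APPLICABLE"
        return result
    present = configuration_item.get("tags") or {}
    missing = sorted(set(required) - set(present))
    if missing:
        result["ComplianceType"] = "NON_COMPLIANT"
        result["Annotation"] = "Missing tags: " + ", ".join(missing)
    else:
        result["ComplianceType"] = "COMPLIANT"
    return result


if __name__ == "__main__":
    item = {
        "resourceType": "AWS::EC2::Instance",
        "resourceId": "i-0123456789abcdef0",  # placeholder ID
        "tags": {"Owner": "platform-team"},
    }
    print(evaluate_tags(item))
```

Keeping the decision logic separate from the AWS calls makes the rule testable locally before it is wired into a Lambda function.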

Teams can combine AWS Config with CloudFormation and IAM to create automated compliance pipelines that validate infrastructure before it is deployed. This approach to governance helps organizations comply with regulations and keep their operations intact.

Serverless Automation with AWS Lambda

AWS Lambda brings event-based automation to the DevOps toolbox. It enables developers to run code in response to events, such as an S3 upload, a DynamoDB update, or a CloudWatch alarm, without provisioning or managing servers. Lambda functions can be used to run scheduled deployments, check the health of resources, or ingest logs in real time.

Lambda is also used in CI/CD workflows to glue services together. A Lambda function may pre-validate a CloudFormation template before it is deployed or send a message to a Slack channel when a build completes. It scales easily and has a low operational cost, which suits lightweight, reactive tasks that increase responsiveness and agility.
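The Slack notification case could be sketched as a small Lambda handler. It assumes the function is invoked with a CodeBuild build state change event, whose `detail` carries `project-name` and `build-status`, and that a Slack incoming webhook URL is supplied through an environment variable. The variable name `SLACK_WEBHOOK_URL` is hypothetical; when it is not set, the handler only returns the message, which keeps the example runnable offline.

```python
import json
import os
import urllib.request


def build_message(event):
    """Turn a build state change event into a Slack message payload."""
    detail = event.get("detail", {})
    project = detail.get("project-name", "unknown project")
    status = detail.get("build-status", "UNKNOWN")
    icon = ":white_check_mark:" if status == "SUCCEEDED" else ":x:"
    return {"text": f"{icon} CodeBuild project {project} finished with status {status}"}


def handler(event, context):
    """Lambda entry point: format the message and post it if a webhook is set."""
    message = build_message(event)
    webhook = os.environ.get("SLACK_WEBHOOK_URL")  # hypothetical variable name
    if webhook:
        request = urllib.request.Request(
            webhook,
            data=json.dumps(message).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(request, timeout=5) as response:
            response.read()
    return message


if __name__ == "__main__":
    sample = {"detail": {"project-name": "demo-app-build", "build-status": "FAILED"}}
    print(build_message(sample)["text"])
```

Separating `build_message` from the network call means the formatting can be tested without Slack, and the webhook URL itself is a good candidate for storage in Secrets Manager.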

Source Control with AWS CodeCommit

AWS CodeCommit is a fully managed service for storing source code in Git repositories, and it integrates with other AWS DevOps tools. It offers reliable, scalable repositories for application code, configuration files, and infrastructure templates. CodeCommit works with standard Git operations and with common CI/CD tools such as Jenkins, GitHub Actions, and AWS CodePipeline.

CodeCommit keeps source code secured with encryption and IAM-based access control. It can also emit events that invoke Lambda functions or start pipelines when changes are pushed, enabling automated workflows and fast iteration.

Closing the Gap Between Vision and Reality

The path to actual DevOps is an ongoing transformation, and it is important to re-evaluate your practices regularly to stay on course toward high-velocity, reliable software delivery. The most successful organizations do not merely apply tools; they establish a culture of automation and cooperation on top of an integrated infrastructure.

By implementing a comprehensive AWS DevOps strategy, you can overcome the disjointed workflows and manual cycles that cause so many setbacks. This improves your development process: you get to market faster, reduce operational overhead, and build a culture of ownership and continuous improvement. Because the AWS tools are designed to work together, your pipeline becomes one cohesive flow rather than a set of loosely fitted steps, and your teams can dedicate more time to innovation than to chasing configurations.

Nevertheless, such a transformation requires expertise and experience, and that is where Orient Software comes in. With in-depth experience of AWS DevOps tools and best practices, we can help you architect and implement a tailored DevOps strategy that suits your business objectives.

The team at Orient Software can guide you in designing and implementing a custom DevOps pipeline that uses the full range of AWS tools, from Infrastructure as Code with CloudFormation to the CI/CD workflow with CodePipeline. By partnering with an expert, you make sure that your team uses the right tools and best practices from the beginning, which puts you on the path to a genuinely automated and efficient development environment and narrows the distance between your DevOps vision and your operational reality.