Which Types of AI Agents Are Best for Your AI Project?

As AI continues to evolve, understanding the different types of AI agents becomes crucial for building intelligent systems that think, learn, and act autonomously. This article examines which agent types suit which project objectives, and how choosing the right one can determine the success of your project.


If artificial intelligence were a city, AI agents would be its citizens: round-the-clock workers who keep everything running smoothly. From customer support chatbots that answer questions within seconds to self-driving vehicles navigating complicated roads, these agents are already changing the way we live and work. According to McKinsey, AI agents might add up to $15 trillion to the global economy by 2030 through intelligent automation, a sign that they are not mere algorithms but the invisible workforce of the digital age.

However, as companies rush to deploy AI agents for repetitive duties or to automate complex operational processes, few realize how different these agents can be from one another. Some operate on preset rules and respond immediately to sensor data. Others use internal models, machine learning algorithms, or utility functions to make informed decisions in dynamic environments. There is a wide gulf between a basic rule-following bot and a fully autonomous AI agent that adapts to real-time conditions. Understanding that spectrum is often what separates successful AI projects from expensive experiments.

Before deciding how to build AI agents or integrate them into business processes, ask a simple question: What type of agent are you actually deploying, and what can it do? The following sections answer that question by breaking down the types of AI agents, how they operate, and why choosing the right one is essential for an AI that not only works but also evolves.

What Are AI Agents?

An AI agent is not merely a piece of code; it is an autonomous system that perceives its environment, reasons about what it senses, and acts on its own toward a defined goal. Intelligent agents do not just execute commands; they make decisions based on circumstances, experience, and changing data, which is what allows them to work in real-world scenarios. Awareness and adaptability are what set AI agents apart from traditional software.

Once you grasp the concept of an AI agent, the next question is not how smart it could be, but how smart it needs to be. All intelligent agents operate with some degree of autonomy: simple reflex agents respond immediately to stimuli, whereas others, such as model-based agents, utility-based agents, and learning agents, reason, plan, or learn from experience. The challenge is matching that intelligence to the problem at hand, because the cost of a mismatch compounds quickly when things go wrong. A heavyweight autonomous AI agent, packed with reasoning and learning functions, can waste time, data, and compute on a task that a simple reflex agent could handle. That is why the smartest AI projects do not chase the most sophisticated model; they choose the most suitable agent.

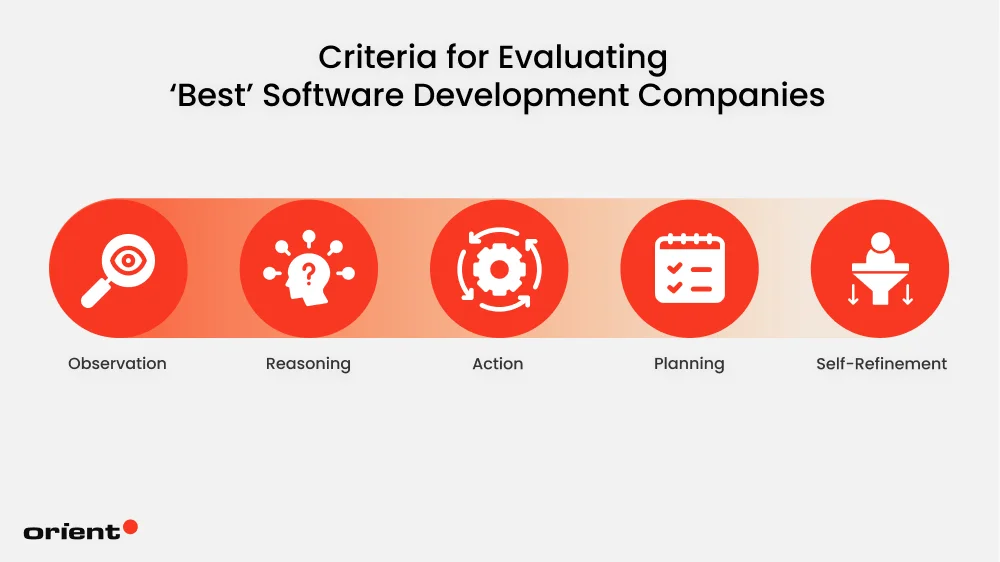

Key Features of an AI Agent

Every effective AI agent relies on a set of capabilities that work together like an ecosystem, each one complementing and strengthening the others.

Observation: Real-world data is partial, messy, and often misleading, particularly in partially observable environments. Strong agents do not try to observe everything; they learn to ignore noise and focus on the cues that drive results, drawing on sensor data, historical data, and previous interactions to recognize patterns and make sound decisions. Observation is not about collecting more data; it is about purposeful perception, such as natural language processing that interprets customer questions or computer vision that reads the road for a self-driving car.

Reasoning: Advanced agents practice bounded reasoning: they weigh the cost of further deliberation against the benefit of acting now, often using a utility function to compare possible outcomes and states. Reasoning just enough, and no more, matters in dynamic environments, and it is what separates more sophisticated agents, starting with model-based reflex agents, from simple reflex agents. The most effective agents understand that intelligence is not unlimited analysis but timely decision-making, supported by search and planning algorithms that lead to the desired outcomes.

Action: Every action alters the environment and produces feedback the agent can learn from, because the agent chooses its next step against a performance measure. The best agents treat every action as an experiment: when the outcome differs from what was expected, they adjust their behavior automatically, which helps them complete tasks, whether repetitive or self-directed. Over time, this forms a continuous feedback loop in which each action refines the next, which is how agents sustain their performance across both routine chores and specialized duties.

Planning: Intelligent agents plan for change; their plans are modular, flexible, and reactive. Rather than searching for a perfect plan, they favor one that can be revised frequently, whether by goal-based agents weighing alternative paths or through behavior-oriented plans. The real skill is not predicting every action but reacting promptly as situations evolve; in a multi-agent framework, this extends to coordinating (or competing) responses among multiple agents.

Self-Refinement: By questioning its own logic, an agent can improve not only its results but also its decision-making system, typically through a learning component powered by machine learning methods. Through meta-learning and reflection, the agent can challenge its earlier assumptions and update its internal model. The same idea extends to systems of several autonomous agents, where lower-level and higher-level agents exchange feedback with one another, and sometimes with human agents, as in customer service chatbots or resource allocation systems.

Together, these abilities form a continuous observe-reason-act-reflect loop, the essential attribute of agentic intelligence. What distinguishes one tier of AI agent from another is how well it balances accuracy, flexibility, and situational awareness, whether it is responding to user requests, following predefined rules, or operating within a larger AI system.
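To make the loop concrete, here is a minimal Python sketch built around a hypothetical thermostat-style agent. The class and method names (`ThermostatAgent`, `observe`, `reason`, `act`, `reflect`) are illustrative assumptions, not part of any framework. The reflect step is where self-refinement happens: if an action overshoots the target, the agent halves its own step size.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Hypothetical agent that heats or cools a room toward a target."""
    target: float
    step: float = 2.0
    history: list = field(default_factory=list)

    def observe(self, env: dict) -> float:
        # Perception: read the current temperature from the environment.
        return env["temperature"]

    def reason(self, temperature: float) -> float:
        # Decide on an adjustment from the gap to the target.
        gap = self.target - temperature
        if abs(gap) < 0.5:
            return 0.0
        return self.step if gap > 0 else -self.step

    def act(self, env: dict, adjustment: float) -> float:
        # Action: change the environment and return the new state.
        env["temperature"] += adjustment
        return env["temperature"]

    def reflect(self, before: float, after: float) -> None:
        # Self-refinement: if the action crossed the target, halve the step.
        if (before - self.target) * (after - self.target) < 0:
            self.step /= 2
        self.history.append((before, after, self.step))


def run(agent: ThermostatAgent, env: dict, steps: int) -> float:
    """Run the observe-reason-act-reflect loop for a fixed number of steps."""
    for _ in range(steps):
        before = agent.observe(env)
        adjustment = agent.reason(before)
        after = agent.act(env, adjustment)
        agent.reflect(before, after)
    return env["temperature"]


agent = ThermostatAgent(target=21.0)
print(run(agent, {"temperature": 16.0}, steps=5), agent.step)  # 21.0 1.0
```

Starting at 16.0, the agent climbs to 22.0, notices it overshot, halves its step, and settles on 21.0: a small instance of each action refining the next.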

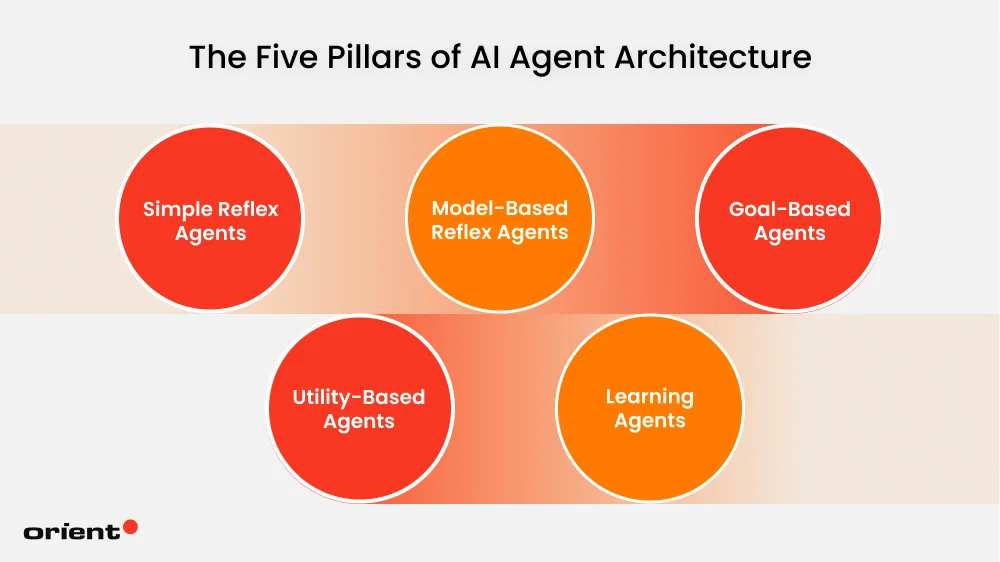

The Five Pillars of AI Agent Architecture

Simple Reflex Agents

Think of a simple reflex agent as the reflex of an AI system: it responds immediately to whatever it perceives, without considering the past or the future. When you touch a hot stove, your hand withdraws before your brain has even processed what happened. A simple reflex agent behaves the same way: it acts only on the current state of its environment, applying simple if-then rules to decide what to do next.

This kind of agent is best suited to predictable, fully observable environments, where all the possible conditions and outcomes are known in advance. No reasoning or adaptation is needed; fast, consistent responses are enough. A basic spam filter works on the same logic: if an email contains phrases such as “free money” or “urgent click here,” the agent immediately moves it to the spam folder. It does not study or interpret your preferences; it simply executes the rule whenever the trigger appears.
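The spam-filter logic above boils down to a table of condition-action rules. The following minimal Python sketch assumes a hypothetical two-rule set and made-up action names; it shows that the agent maps each email directly to an action, with no memory and no learning.

```python
# Hypothetical condition-action rules: (trigger phrase, action).
RULES = [
    ("free money", "move_to_spam"),
    ("urgent click here", "move_to_spam"),
]
DEFAULT_ACTION = "deliver_to_inbox"


def simple_reflex_spam_agent(email_text: str) -> str:
    """Map the current percept (one email) directly to an action.

    No internal state: the same input always produces the same output.
    """
    text = email_text.lower()
    for phrase, action in RULES:
        if phrase in text:
            return action
    return DEFAULT_ACTION


print(simple_reflex_spam_agent("Claim your FREE MONEY today"))   # move_to_spam
print(simple_reflex_spam_agent("Minutes from Monday's meeting"))  # deliver_to_inbox
```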

Simple reflex agents are not meant to be clever; they are meant to be fast, steady, and consistent. They form the foundational layer of AI architecture: the kind of behavior that keeps things safe and predictable, and on top of which more complex reasoning or learning can be built.

Model-Based Reflex Agents

If a simple reflex agent is like a person acting on instinct, a model-based reflex agent is like a person who remembers what just happened before making a move. It does not react only to what it perceives right now; it also keeps a small picture of how the world works in its “mind.” That internal model helps it act when the environment is not fully visible or clear.

A good real-life analogy is driving on a foggy road. You cannot see far ahead, yet you make decisions based on what you saw a few seconds ago: the last turn, the position of the car, and how the road felt. A model-based agent does exactly this: it fills the gaps in its current input with an internal state built from past observations. This type of agent works well in dynamic environments that still resemble conditions it has seen before.
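Here is a minimal sketch of the foggy-road idea in Python. It assumes a hypothetical distance sensor that returns the gap to the car ahead in meters, or `None` when fog hides it. The class name, the 20 m safe gap, and the constant-rate world model are all illustrative assumptions. When a reading is missing, the agent falls back on its internal state instead of going blind.

```python
from typing import Optional


class ModelBasedFollowAgent:
    """Keeps a safe gap to the car ahead, even when fog hides it.

    Hypothetical internal model: the gap keeps changing at its last
    estimated rate until a fresh reading arrives.
    """

    def __init__(self, safe_gap: float = 20.0):
        self.safe_gap = safe_gap
        self.estimated_gap: Optional[float] = None
        self.gap_rate = 0.0  # estimated change in gap per step

    def update_state(self, percept: Optional[float]) -> None:
        if percept is not None:
            if self.estimated_gap is not None:
                self.gap_rate = percept - self.estimated_gap
            self.estimated_gap = percept
        elif self.estimated_gap is not None:
            # No reading: predict the gap from the internal model.
            self.estimated_gap += self.gap_rate

    def act(self, percept: Optional[float]) -> str:
        self.update_state(percept)
        if self.estimated_gap is None:
            return "slow_down"  # nothing known yet, so be cautious
        return "brake" if self.estimated_gap < self.safe_gap else "maintain_speed"


agent = ModelBasedFollowAgent()
print([agent.act(p) for p in [30.0, 25.0, None, None]])
# ['maintain_speed', 'maintain_speed', 'maintain_speed', 'brake']
```

A simple reflex agent given the same `None` percepts would have no rule to apply; this one brakes on the fourth step because its model predicts the gap has shrunk to 15 m.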

In short, model-based reflex agents add memory and context. Their reactions take into account what came before. It is a small increase in complexity but a large step toward AI systems that act more like people: observant, contextual, and somewhat thoughtful in each choice.

Goal-Based Agents

Whereas reflex agents act automatically, goal-based agents act intentionally, strategizing their way through the environment. Rather than asking, “What should I do right now?”, they ask a bigger question: “What should I do to achieve my goal?”

Goal-based agents use planning and search algorithms to determine the sequence of actions that will take them from their current state to a desired state. This makes them most effective where the effects of actions are predictable but the path to the goal is not obvious. GPS navigation is the easiest example: you enter a destination, and the system analyzes the possible routes, distances, and travel times before selecting the most efficient route. Each turn is chosen not in isolation but as part of a larger plan to reach the goal.
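The GPS example is, at its core, a search problem. As an illustration, the sketch below uses Dijkstra's algorithm (one common choice; production navigation systems use more elaborate methods) over a small, made-up road network to find the sequence of moves that reaches the goal in the least total time.

```python
import heapq


def plan_route(graph, start, goal):
    """Goal-based planning: search for a sequence of moves from start to goal.

    `graph` maps each node to {neighbour: travel_minutes}. Dijkstra's
    algorithm returns the path with the lowest total travel time.
    """
    frontier = [(0, start, [start])]  # (cost so far, node, path taken)
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, cost
        if node in visited:
            continue
        visited.add(node)
        for neighbour, minutes in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(frontier, (cost + minutes, neighbour, path + [neighbour]))
    return None, float("inf")  # goal unreachable


# Hypothetical road network: minutes between intersections.
roads = {
    "Home": {"A": 5, "B": 2},
    "A": {"Office": 4},
    "B": {"A": 1, "Office": 9},
}
print(plan_route(roads, "Home", "Office"))  # (['Home', 'B', 'A', 'Office'], 7)
```

Note that the chosen first move (to `B`) is not the one that looks best locally; it only makes sense as part of the whole plan.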

Goal-based agents excel at problems that require multi-step reasoning, such as robotics, autonomous delivery systems, workflow automation, and game AI. That capability has a price: more computation, more data, and constant assessment of whether each new action serves the overall goal. Goal-based systems mark the point in the hierarchy of AI agents where action becomes strategy. They bridge the gap between instinctive behavior and genuine decision-making: the first step toward AI that does not merely act but also knows why it is acting.

Utility-Based Agents

If goal-based agents know where they want to go, utility-based agents know how to choose the best way to get there. They do not simply pursue an objective; they consider the possible courses of action and evaluate how good or bad each outcome would be. Put differently, they do not just want to arrive; they want to arrive well.

Think of planning a trip. A goal-based system may simply take the fastest path from A to B. A utility-based agent weighs more: Should I avoid toll roads? Would I prefer the scenic route, or the one that saves fuel? It scores every alternative with a utility function, a numerical measure of how desirable an outcome is.
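A utility function can be as simple as a weighted sum of an outcome's attributes. The sketch below assumes hypothetical routes and preference weights (negative for costs such as time, tolls, and fuel; positive for a scenic bonus) and picks the route with the highest score. Changing the weights, rather than the code, is how the agent's priorities shift.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    minutes: float
    toll_cost: float
    fuel_litres: float
    scenic: bool


# Hypothetical preferences: negative weights penalize costs, positive reward benefits.
WEIGHTS = {"minutes": -1.0, "toll_cost": -3.0, "fuel_litres": -2.0, "scenic": 10.0}


def utility(route: Route, weights=WEIGHTS) -> float:
    """Score how desirable a route is; higher is better."""
    return (weights["minutes"] * route.minutes
            + weights["toll_cost"] * route.toll_cost
            + weights["fuel_litres"] * route.fuel_litres
            + weights["scenic"] * route.scenic)


def choose(routes, weights=WEIGHTS) -> Route:
    """Pick the alternative with the highest utility."""
    return max(routes, key=lambda r: utility(r, weights))


routes = [
    Route("highway", minutes=40, toll_cost=8, fuel_litres=5, scenic=False),
    Route("coastal", minutes=55, toll_cost=0, fuel_litres=6, scenic=True),
    Route("back_roads", minutes=50, toll_cost=0, fuel_litres=4, scenic=False),
]
print(choose(routes).name)  # coastal

in_a_hurry = {"minutes": -5.0, "toll_cost": -1.0, "fuel_litres": -1.0, "scenic": 0.0}
print(choose(routes, in_a_hurry).name)  # highway
```

The same agent picks the scenic coastal road under one set of preferences and the toll highway under another, which illustrates how a utility-based agent can change strategy when priorities change.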

This is why utility-based agents are best suited to situations where trade-offs matter, when there is no single “right” answer, only better or worse answers depending on the circumstances. In finance, for example, an algorithmic trading agent does not simply aim for profit. It estimates expected returns, quantifies risk, and selects the course of action that offers the best balance of long-term gain against potential loss.

Utility-based agents are also more flexible and adaptive than simple goal-chasers because they constantly weigh alternatives. They can change strategies as priorities change instead of being locked into a single direction. However, that added intelligence also makes them harder to build, because it is rarely easy to define a utility function that truly reflects human or business preferences.

Learning Agents

If utility-based agents aim to make the best possible choice, learning agents go a step further: they learn to make better choices over time. Instead of relying only on fixed rules or goals, they observe, adjust, and improve with experience. Every interaction, including every mistake, becomes a lesson.

Think of a learning agent as a new employee in training. On day one, they follow the handbook to the letter. A few weeks later, they start to notice patterns: what works, what does not, and how to do things faster. Eventually they stop relying on instructions altogether and start anticipating what needs to happen next. A learning agent evolves in the same way, continuously expanding its understanding of its environment and improving its decisions.

Technically, a learning agent consists of four fundamental components: a performance element that selects actions, a critic that evaluates outcomes against a performance standard, a learning element that uses the critic's feedback to improve the performance element, and a problem generator that suggests exploratory actions. Together, these components form a feedback loop that lets the agent get smarter with each interaction. Such an agent is well suited to dynamic and unpredictable situations, where rules change, data evolves, and human behavior varies over time.
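The four components map neatly onto a classic epsilon-greedy learner. In this hypothetical Python sketch, a support bot chooses among three reply templates (`A`, `B`, `C`) whose success rates are made up and hidden from the agent. The problem generator occasionally proposes a random template, the performance element otherwise picks the best-known one, the critic turns each outcome into a reward, and the learning element updates a running average.

```python
import random


class LearningAgent:
    """Epsilon-greedy learner organized around the four classic components."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.estimates = {a: 0.0 for a in actions}  # learned value of each action
        self.counts = {a: 0 for a in actions}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def problem_generator(self):
        """Suggest an exploratory action with probability epsilon, else None."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.estimates))
        return None

    def performance_element(self):
        """Choose an action: explore if suggested, otherwise exploit."""
        exploratory = self.problem_generator()
        if exploratory is not None:
            return exploratory
        return max(self.estimates, key=self.estimates.get)

    @staticmethod
    def critic(resolved: bool) -> float:
        """Score the outcome against the performance standard (resolved = 1)."""
        return 1.0 if resolved else 0.0

    def learning_element(self, action, reward):
        """Update the running-average value estimate for the chosen action."""
        self.counts[action] += 1
        self.estimates[action] += (reward - self.estimates[action]) / self.counts[action]


def simulate(steps=2000, seed=1):
    # Hypothetical environment: each template resolves a ticket with a fixed
    # probability that the agent does not know in advance.
    success_rate = {"A": 0.2, "B": 0.8, "C": 0.5}
    env_rng = random.Random(seed)
    agent = LearningAgent(list(success_rate))
    for _ in range(steps):
        action = agent.performance_element()
        resolved = env_rng.random() < success_rate[action]
        agent.learning_element(action, agent.critic(resolved))
    return agent


agent = simulate()
print(agent.counts, {a: round(v, 2) for a, v in agent.estimates.items()})
```

The sample-average update weights all history equally; for environments that drift, a constant step size is a common alternative so that recent feedback counts more.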

Learning agents are the most adaptive members of the AI family. They can discover patterns that no one explicitly taught them and improve themselves over time. In many ways, the evolution of AI itself mirrors the journey of a learning agent. This adaptability is why they underpin modern applications such as autonomous vehicles, fraud detection, and intelligent assistants. Naturally, this flexibility comes with challenges: learning agents cannot reach their potential without large amounts of data, time, and feedback.

Multi-Agent Systems (MAS)

If a learning agent can think and adapt on its own, a multi-agent system (MAS) is what emerges when many agents work together toward a shared goal. Strictly speaking, a MAS is less a separate agent type than an arrangement of agents of any of the types above. Rather than relying on a single intelligent agent to manage everything, a MAS distributes intelligence across many specialized agents, each with its own role, perspective, and purpose, and lets them cooperate, negotiate, or even compete to get things done.

This architecture is particularly powerful in complex, dynamic environments where decisions must be made simultaneously at many levels. Take a fleet of autonomous delivery drones: each drone must avoid obstacles, conserve its battery, and follow its route, yet all the drones share data to coordinate delivery times and avoid collisions. Another real-world application is the smart energy grid, where different agents manage electricity demand, production, and pricing in real time to prevent overloads and maximize efficiency.
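One simple way drones can coordinate is a contract-net-style auction: each agent computes its own bid locally, and a coordinator awards each task to the cheapest bidder. The sketch below assumes a grid world, Manhattan distance as cost, and a battery measured in the same distance units; all names and numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Drone:
    name: str
    x: float
    y: float
    battery: float  # remaining range, in grid-distance units
    assigned: list = field(default_factory=list)

    def bid(self, task):
        """Return this drone's cost for a task, or None if it cannot do it."""
        tx, ty = task["location"]
        distance = abs(self.x - tx) + abs(self.y - ty)  # Manhattan distance
        return distance if distance <= self.battery else None


def allocate(drones, tasks):
    """Auction tasks one at a time to the lowest bidder."""
    allocation = {}
    for task in tasks:
        bids = [(d.bid(task), d.name, d) for d in drones]
        bids = [b for b in bids if b[0] is not None]
        if not bids:
            allocation[task["id"]] = None  # no drone can take this task
            continue
        cost, _, winner = min(bids)  # ties broken by drone name
        winner.battery -= cost
        winner.x, winner.y = task["location"]
        winner.assigned.append(task["id"])
        allocation[task["id"]] = winner.name
    return allocation


drones = [Drone("d1", 0, 0, battery=10), Drone("d2", 5, 5, battery=10)]
tasks = [
    {"id": "t1", "location": (1, 1)},
    {"id": "t2", "location": (6, 5)},
    {"id": "t3", "location": (2, 0)},
]
print(allocate(drones, tasks))  # {'t1': 'd1', 't2': 'd2', 't3': 'd1'}
```

Task `t2` goes to `d2` partly because `d1` no longer has enough battery to bid on it: each drone's local constraints shape the global allocation without any single agent planning everything.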

What sets MAS apart is its ability to model social behaviors such as cooperation, competition, and negotiation in artificial systems. Agents can form alliances, share tasks, or change their strategies in response to other agents, much like human organizations. This collective intelligence lets systems scale: a more complex task may only require adding a few more agents, rather than overloading a single central brain.

Multi-agent systems are the society of artificial intelligence: a world in which the smallest units play their part in something bigger through communication and coordination. As AI projects grow more complex and interconnected, this approach offers a glimpse of what autonomous collaboration might look like: not one all-knowing machine, but a network of smart agents, each contributing its part to make the whole system smarter.

Practical Applications: Matching Agent to Industry

To make this concrete, the following table maps each type of AI agent to an industry-specific application.

| Industry/Workflow | The Problem | Recommended Agent Type | Why It Works Best |
|---|---|---|---|
| E-commerce Checkout | Real-time fraud scoring for transactions. | Model-Based Reflex Agent | Fast, high-volume action based on recognizing historical patterns. |
| Supply Chain/Logistics | Dynamic route optimization to reduce fuel cost and time. | Utility-Based Agent | Can balance competing factors like time, cost, and capacity to find the best solution. |
| Customer Support | Handling complex, multi-step inquiries and learning from past resolutions. | Learning Agent / Multi-Agent System | Needs to adapt to new issues and improve its knowledge base autonomously. |
| Smart Manufacturing | Simple machine operation control (e.g., stopping when a sensor fault occurs). | Simple Reflex Agent | Requires rapid, rule-based reaction to a single, critical input. |
| Sales & Lead Qualification | Researching a prospect, synthesizing the data, and generating a briefing document. | Goal-Based Agent | Needs to execute a clear, sequential plan to achieve a defined output. |

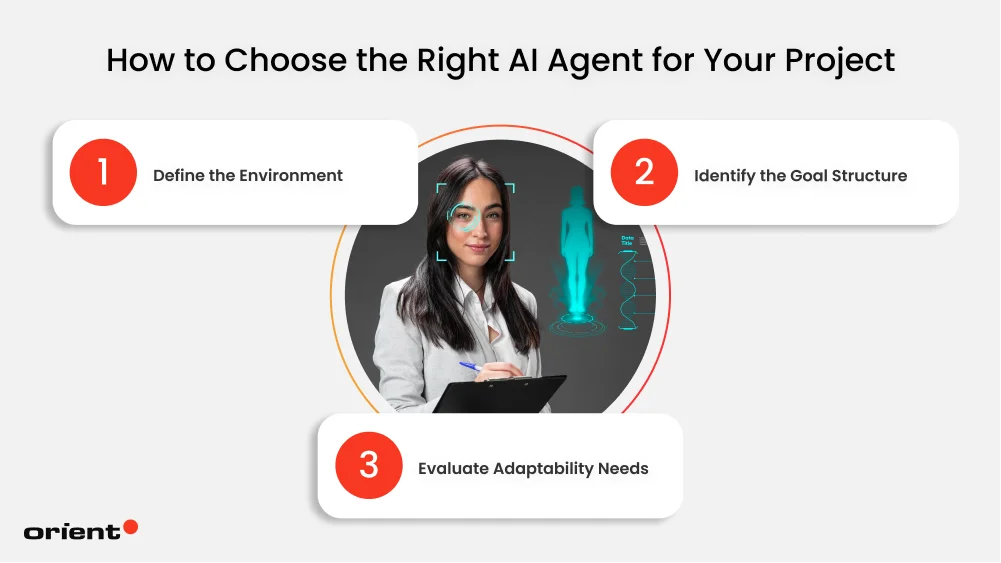

How to Choose the Right AI Agent for Your Project

Step 1: Define the Environment

The first step is to be brutally honest about how your environment actually behaves, not how you think it should behave in a controlled demo. That means understanding how information flows, how often conditions change, and how much of that change you can detect in real time. When all the relevant variables are measurable through sensors, logs, or APIs, a simple reflex agent can shine, because it can always observe the full state before acting.

In practice, however, visibility is often partial, delayed, or noisy. To define the environment properly, you need to stress-test perception: simulate data dropouts or sensor failures, inject conflicting inputs, and observe whether your system still behaves rationally. If its behavior collapses, you are in a partially observable environment that calls for a model-based reflex agent, one that builds an internal model of the world to fill in the gaps. This is not an academic diagnosis but an operational one. How you specify the environment determines whether your agent responds, reasons, or merely guesses.
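A perception stress test can be automated. The following sketch assumes a hypothetical temperature feed and a made-up fault model (random dropouts and physically impossible negative readings), and measures how often a policy is left with no usable action. A purely reflexive policy fails whenever a reading is bad, while one that remembers the last valid reading keeps working.

```python
import random
from typing import Callable, Iterable, Iterator, Optional

Policy = Callable[[Optional[float]], Optional[str]]


def inject_faults(readings: Iterable[float], dropout: float = 0.2,
                  conflict: float = 0.05, seed: int = 0) -> Iterator[Optional[float]]:
    """Yield readings with simulated dropouts (None) and conflicting values."""
    rng = random.Random(seed)
    for value in readings:
        r = rng.random()
        if r < dropout:
            yield None                # sensor dropout
        elif r < dropout + conflict:
            yield -abs(value) - 1.0   # impossible negative reading
        else:
            yield value


def stress_test(policy: Policy, readings: Iterable[float], **faults) -> float:
    """Return the fraction of steps where the policy had no usable action."""
    outcomes = [policy(r) for r in inject_faults(readings, **faults)]
    return outcomes.count(None) / len(outcomes) if outcomes else 0.0


def reflex_policy(reading: Optional[float]) -> Optional[str]:
    # Rules only cover valid, visible readings.
    if reading is None or reading < 0:
        return None
    return "cool" if reading > 25 else "idle"


def make_model_based_policy() -> Policy:
    last_valid: Optional[float] = None

    def policy(reading: Optional[float]) -> Optional[str]:
        nonlocal last_valid
        if reading is not None and reading >= 0:
            last_valid = reading  # update internal state only from valid input
        if last_valid is None:
            return None
        return "cool" if last_valid > 25 else "idle"

    return policy


readings = [20 + (i % 10) for i in range(200)]
print(stress_test(reflex_policy, readings))
print(stress_test(make_model_based_policy(), readings))
```

Both runs use the same seed, so the two policies face an identical fault pattern. If the reflexive policy's failure rate is high under realistic fault rates, that is a strong hint the environment is only partially observable.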

Step 2: Identify the Goal Structure

Once you know what the agent can see, the next question is: “How far ahead does it need to reason?” Many teams get this wrong because they think in terms of actions rather than consequences. A better approach is to determine the time horizon of impact: which decisions made now will still matter an hour, a day, or a quarter later?

If the system's outputs are immediate and isolated, such as sorting messages, flagging transactions, or routing support tickets, a simple reflex or model-based reflex agent is usually the best fit, because it maximizes local efficiency. But if today's action changes tomorrow's options, as in resource allocation, pricing, or long-range logistics, your agent needs a goal-based or utility-based framework.

In such systems, every action should be judged not only by its immediate impact but also by whether it moves the system toward an intended future state. A goal-based agent works toward a specific goal, whereas a utility-based agent weighs competing outcomes and chooses the one with the highest utility. The distinction may seem abstract, but it is a competitive advantage: organizations that define their utility functions well are not merely automated; they act strategically across time and under uncertainty.

Step 3: Evaluate Adaptability Needs

With the environment and goal horizon mapped, you face the adaptability question: how much freedom should your agent have to evolve? It is tempting to default to learning agents because they sound more advanced, but unmanaged learning can undermine performance. The key is to measure volatility: how often does your data distribution change, and by how much?
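One common way to put a number on distribution drift is the Population Stability Index (PSI), which compares the binned distribution of a feature in a reference window with a recent one. Here is a minimal Python sketch, assuming equal-width bins over the reference sample's range and clamping out-of-range values into the edge bins.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between a reference sample (`expected`) and a recent sample (`actual`)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        return [c / len(values) for c in counts]

    eps = 1e-6  # avoids log(0) for empty bins
    psi = 0.0
    for e, a in zip(proportions(expected), proportions(actual)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


reference = [i / 100 for i in range(1000)]
print(population_stability_index(reference, reference))                        # 0.0
print(population_stability_index(reference, [v + 5 for v in reference]) > 0.25)  # True
```

A frequently cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift; these thresholds are conventions rather than guarantees and are worth calibrating on your own data.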

If the environment is structurally stable, as in document processing automation or system monitoring, fixed rule sets can outperform adaptive models because they are consistent and interpretable. In dynamic situations where behavior patterns drift, such as fraud detection, customer engagement, or predictive maintenance, agents should be able to learn on the fly from new feedback.

Here, a learning agent combines a learning element that refines its model from past interactions with a policy for balancing exploration and exploitation. What many teams miss is that learning is a liability, not a feature, unless it is managed. You need checkpoints, versioned retraining, and sandbox testing to ensure that improvements do not undermine reliability. In other words, adaptability must be designed, not assumed.
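The checkpoint, versioning, and sandbox ideas can be combined into a simple promotion gate. In this hypothetical sketch, models are plain Python callables, every retrained candidate is stored under a new version number, and a candidate replaces the active version only if it scores strictly better on a held-out sandbox set. The registry class and its methods are illustrative, not a real library API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Model = Callable[[Any], Any]


def accuracy(model: Model, sandbox: List[Tuple[Any, Any]]) -> float:
    """Share of sandbox cases the model gets right."""
    return sum(model(x) == y for x, y in sandbox) / len(sandbox)


@dataclass
class ModelRegistry:
    """Hypothetical versioned registry with a sandbox promotion gate."""
    versions: Dict[int, Model] = field(default_factory=dict)
    active_version: int = 0  # 0 means nothing has been promoted yet

    def register(self, model: Model) -> int:
        # Checkpoint every retrained candidate under a new version number.
        version = max(self.versions, default=0) + 1
        self.versions[version] = model
        return version

    def promote_if_better(self, version: int, sandbox: List[Tuple[Any, Any]],
                          min_gain: float = 0.0) -> bool:
        candidate = self.versions[version]
        if self.active_version == 0:
            self.active_version = version
            return True
        current = self.versions[self.active_version]
        if accuracy(candidate, sandbox) > accuracy(current, sandbox) + min_gain:
            self.active_version = version
            return True
        return False  # keep serving the current version; the candidate stays archived


sandbox = [(1, "low"), (5, "low"), (12, "high"), (20, "high")]
registry = ModelRegistry()
v1 = registry.register(lambda x: "high" if x > 10 else "low")
v2 = registry.register(lambda x: "high" if x > 3 else "low")  # a worse retrain
print(registry.promote_if_better(v1, sandbox), registry.promote_if_better(v2, sandbox))
print(registry.active_version)
```

The strictly-greater comparison is a deliberate design choice: a retrained model that merely ties the current one does not justify the risk of a swap, and because every version is kept, rolling back is just a matter of pointing `active_version` at an older entry.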

The Multi-Agent Consideration (Bonus)

Even the best single agent hits a limit when the task space becomes too broad. In complex systems, such as end-to-end supply chain management or autonomous research assistants, it is often better to orchestrate several specialized agents than to build one all-knowing agent. Each agent owns a narrow domain, such as data gathering, analysis, action, or monitoring. Frameworks such as CrewAI and AutoGen now make this kind of orchestration practical, allowing agents to communicate, delegate, and critique each other's work.

The real challenge is not technical but managerial: you must define how agents negotiate control, share intermediate context, and resolve conflicts. Done well, this setup resembles a high-functioning organization: a group of autonomous AI agents, each responsible for its own area and aligned toward the same goal. When the system's capability stops growing linearly and starts to compound, that is when it becomes truly intelligent.
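CrewAI and AutoGen each provide their own abstractions for this; the plain-Python sketch below uses neither and is only meant to illustrate the managerial rules. It assumes a hypothetical inventory task: specialized agents share one context dictionary, a monitoring agent can veto the result, and a fixed conflict-resolution rule (halve the order and retry) decides what happens after a veto.

```python
from typing import Any, Dict, Tuple

Context = Dict[str, Any]


def gather(ctx: Context) -> Context:
    # Data-gathering agent: hypothetical stock levels from an inventory system.
    ctx["stock"] = {"widgets": 40, "gears": 5}
    return ctx


def analyze(ctx: Context) -> Context:
    # Analysis agent: flag items below the reorder threshold.
    threshold = ctx.get("reorder_threshold", 10)
    ctx["to_reorder"] = [item for item, qty in ctx["stock"].items() if qty < threshold]
    return ctx


def act(ctx: Context) -> Context:
    # Action agent: draft a purchase order for each flagged item.
    ctx["orders"] = [{"item": item, "qty": ctx["order_qty"]} for item in ctx["to_reorder"]]
    return ctx


def monitor(ctx: Context) -> Tuple[bool, str]:
    # Monitoring agent: veto the plan if it exceeds the budget.
    cost = sum(order["qty"] * ctx["unit_cost"] for order in ctx["orders"])
    if cost > ctx["budget"]:
        return False, f"cost {cost} exceeds budget {ctx['budget']}"
    return True, "ok"


def orchestrate(ctx: Context, max_rounds: int = 3) -> Context:
    """Run gather -> analyze -> act, let the monitor review, retry on veto."""
    for _ in range(max_rounds):
        for agent in (gather, analyze, act):
            ctx = agent(ctx)  # every agent reads and writes the shared context
        approved, reason = monitor(ctx)
        ctx.setdefault("log", []).append(reason)
        if approved:
            ctx["approved"] = True
            return ctx
        ctx["order_qty"] //= 2  # conflict-resolution rule: halve and retry
    ctx["approved"] = False
    return ctx


result = orchestrate({"order_qty": 100, "unit_cost": 2, "budget": 120})
print(result["orders"], result["log"])
```

Here the first plan is vetoed (a cost of 200 against a budget of 120), and the halved order of 50 gears is approved on the second round. The rules for who may veto, what context is shared, and how conflicts are settled are exactly the managerial decisions described above.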

Starting Your Agentic Journey

The best AI agents are not the ones with the most parameters or the most complex architecture; they are the ones that fit the real shape of your problem. The biggest strategic mistake is not choosing a poor algorithm but over-engineering intelligence without understanding what kind of environment, feedback, and control loops the problem actually requires.

The smart move is to start small, not because small means simple, but because it reveals the truth. A well-designed reflex or model-based agent can serve as your proof of concept: a controlled experiment that shows how your environment responds, where your data bottlenecks are, and how predictable your operational dynamics really are. Once that foundation is stable, scaling up to goal-based, utility-based, or learning agents becomes a matter of deliberate design rather than guesswork. You will see clearly which additional intelligence is worth building and which would only create waste.

Building AI agents is not about chasing sophistication; it is about matching capability to context. Strike that balance, and complexity emerges on its own, not as ornament but as necessity. Ready to build your first AI agent? Get in touch with Orient Software to map your project to the best agent architecture and turn your AI strategy into something that actually works in the field.